
I am a Ph.D. student at the Center on Frontiers of Computing Studies at Peking University, where I have been advised by Prof. He Wang since 2022. Prior to this, I earned my M.S. degree from NUDT under the supervision of Prof. Kai Xu. I received my B.Eng. degree from Shandong University. My research goal is to develop intelligent and practical robots that enhance people's daily lives. My current research focuses on building intelligent navigation robots based on vision-language models. I am also interested in scene reconstruction and understanding. Email / Google Scholar / CV / GitHub


[2025/10/16] Uni-NaVid has been released! Check out the VLA model (fine-tuning) and evaluation code!

*: equal contribution; †: corresponding author(s)


Jiazhao Zhang*, Anqi Li*, Yunpeng Qi*, Minghan Li*, Jiahang Liu, Shaoan Wang, Haoran Liu, Gengze Zhou, Yuze Wu, Xingxing Li, Yuxin Fan, Wenjun Li, Zhibo Chen, Fei Gao, Qi Wu, Zhizheng Zhang†, He Wang† ICLR 2026 Paper / Project page We introduce a cross-embodiment and cross-task Navigation Foundation Model (NavFoM), trained on eight million navigation samples that encompass quadrupeds, drones, wheeled robots, and vehicles, and that span diverse tasks such as vision-and-language navigation, object searching, target tracking, and autonomous driving.


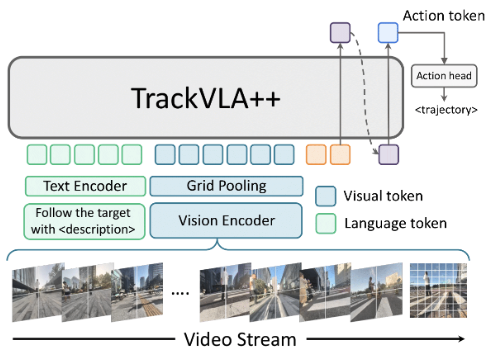

Jiahang Liu*, Yunpeng Qi*, Jiazhao Zhang*, Minghan Li, Shaoan Wang, Kui Wu, Hanjing Ye, Hong Zhang, Zhibo Chen, Fangwei Zhong, Zhizheng Zhang†, He Wang† ICRA 2026 Paper / Project page TrackVLA++ is a novel Vision-Language-Action model that incorporates spatial reasoning and target identification memory, enabling superior performance in both long-horizon and highly crowded tracking scenarios.


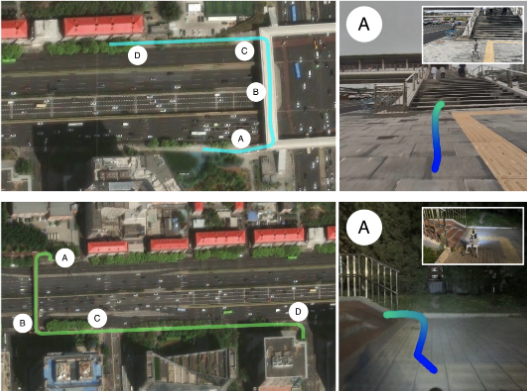

Anqi Li*, Zhiyong Wang*, Jiazhao Zhang*, Minghan Li, Zhibo Chen, Zhizheng Zhang†, He Wang† ICRA 2026 Paper / Project page UrbanVLA is a route-conditioned Vision-Language-Action model for urban micromobility. It aligns noisy routes from navigation tools with visual observations to enable scalable, long-horizon navigation. Trained via a two-stage pipeline of SFT followed by RFT, UrbanVLA outperforms baselines by over 55% on MetaUrban and achieves robust real-world navigation on routes longer than 500 m.


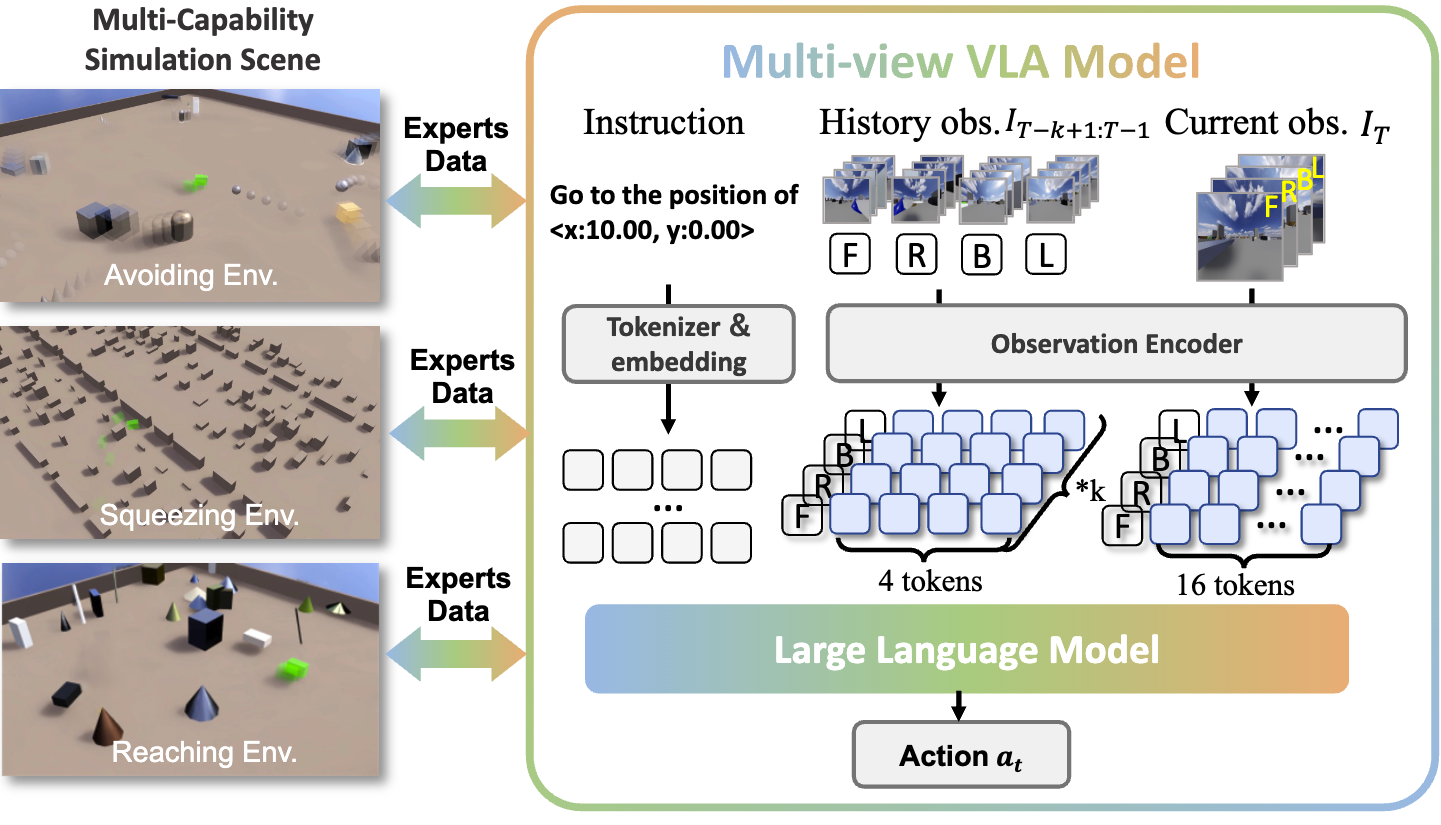

Tianyu Xu*, Jiawei Chen*, Jiazhao Zhang*, Wenyao Zhang, Zekun Qi, Minghan Li, Zhizheng Zhang†, He Wang† arXiv Preprint Paper / Project page We present MM-Nav, a multi-view Vision-Language-Action (VLA) system with 360° observation, built upon pretrained large language models and visual foundation models. The model is trained on large-scale expert navigation data collected from three reinforcement learning (RL) agents.


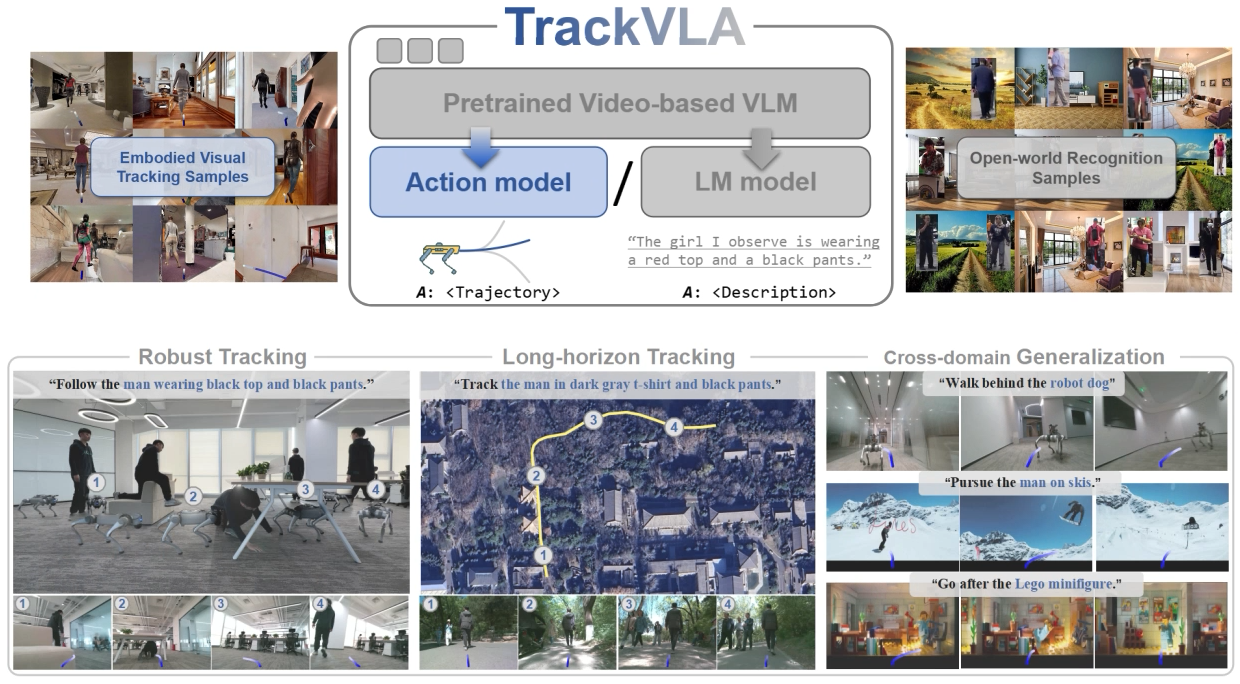

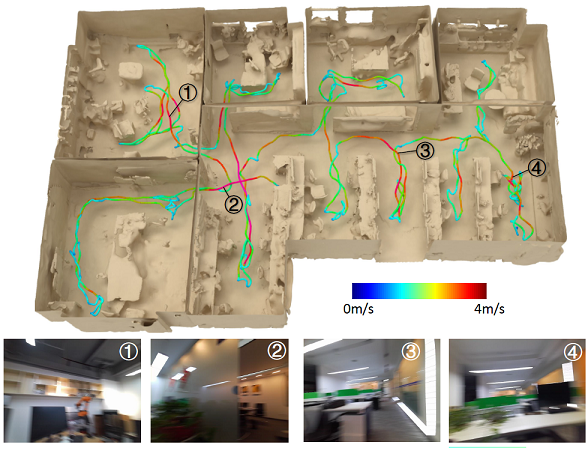

Shaoan Wang*, Jiazhao Zhang*, Minghan Li, Jiahang Liu, Anqi Li, Kui Wu, Fangwei Zhong, Junzhi Yu, Zhizheng Zhang†, He Wang† CoRL 2025 Paper / Code / Project page TrackVLA is a vision-language-action model capable of simultaneous object recognition and visual tracking, trained on a dataset of 1.7 million samples. It demonstrates robust tracking, long-horizon tracking, and cross-domain generalization across diverse challenging environments.


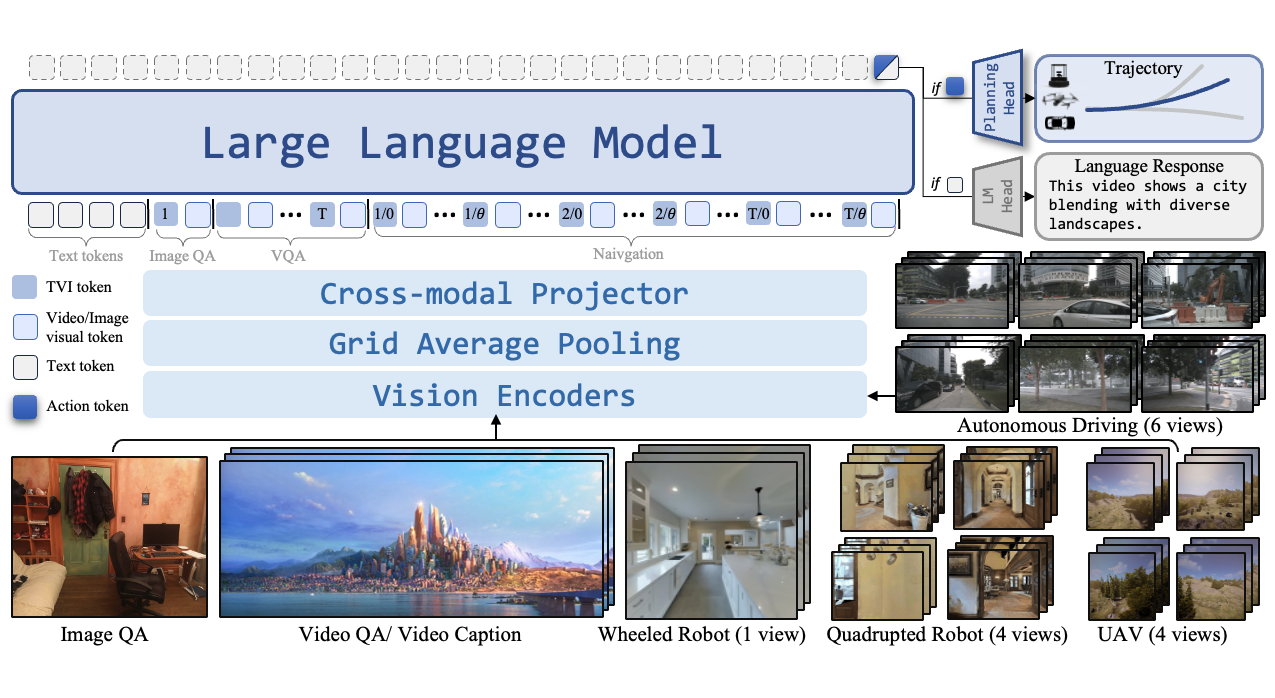

Jiazhao Zhang, Kunyu Wang, Shaoan Wang, Minghan Li, Haoran Liu, Songlin Wei, Zhongyuan Wang, Zhizheng Zhang†, He Wang† RSS 2025 Paper / Code / Project page We present Uni-NaVid, the first video-based vision-language-action (VLA) model designed to unify diverse embodied navigation tasks and enable seamless navigation for mixed long-horizon tasks in unseen real-world environments.


Haoran Geng*, Feishi Wang*, Songlin Wei*, Yuyang Li*, Bangjun Wang*, Boshi An*, Charlie Tianyue Cheng*, Haozhe Lou, Peihao Li, Yen-Jen Wang, Yutong Liang, Dylan Goetting, Chaoyi Xu, Haozhe Chen, Yuxi Qian, Yiran Geng, Jiageng Mao, Weikang Wan, Mingtong Zhang, Jiangran Lyu, Siheng Zhao, Jiazhao Zhang, Jialiang Zhang, Chengyang Zhao, Haoran Lu, Yufei Ding, Ran Gong, Yuran Wang, Yuxuan Kuang, Ruihai Wu, Baoxiong Jia, Carlo Sferrazza, Hao Dong, Siyuan Huang, Koushil Sreenath, Yue Wang†, Jitendra Malik†, Pieter Abbeel† RSS 2025 Paper / Code / Project page


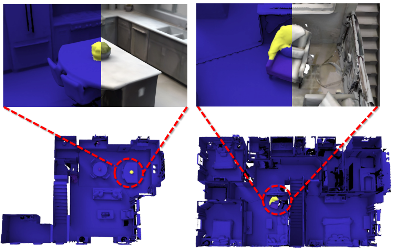

Jiazhao Zhang*, Kunyu Wang*, Rongtao Xu*, Gengze Zhou, Yicong Hong, Xiaomeng Fang, Qi Wu, Zhizheng Zhang†, He Wang† RSS 2024 Paper / Code / Project page NaVid is the first work to show that VLMs can achieve state-of-the-art navigation performance without any maps, odometry, or depth inputs. Given a human instruction, NaVid requires only an on-the-fly video stream from the robot's monocular RGB camera to output the next-step action.


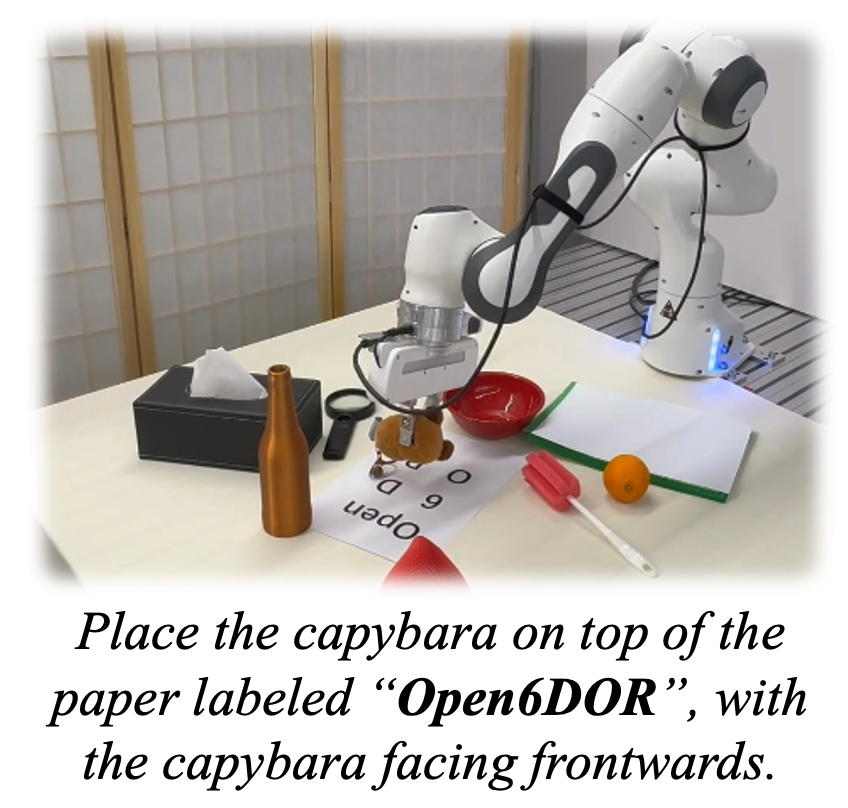

Yufei Ding*, Haoran Geng*, Chaoyi Xu, Xiaomeng Fang, Jiazhao Zhang, Songlin Wei, Qiyu Dai, Zhizheng Zhang, He Wang† IROS 2024 Code / Project page We present Open6DOR, a challenging and comprehensive benchmark for open-instruction 6-DoF object rearrangement tasks. Following this, we propose a zero-shot method, Open6DORGPT, which achieves SOTA performance and proves effective in demanding simulation environments and real-world scenarios.


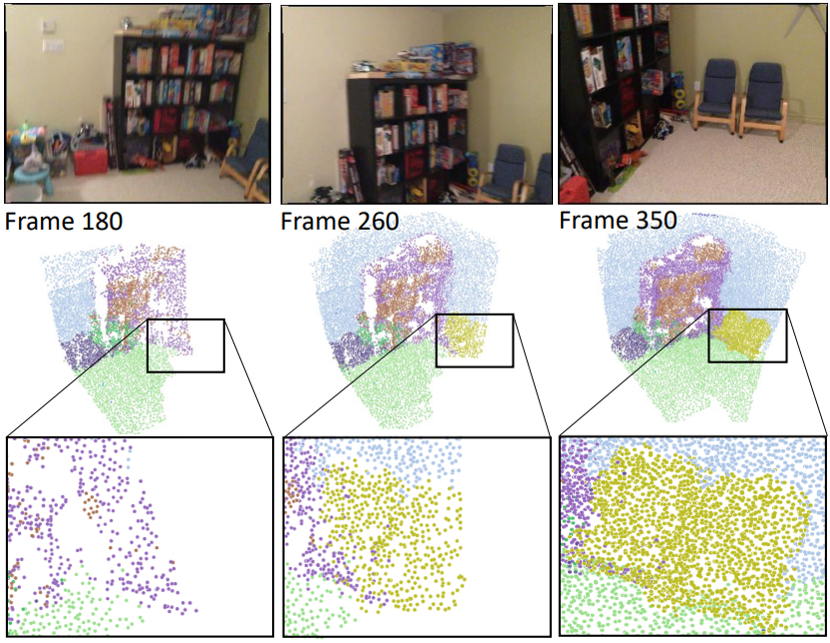

Mi Yan, Jiazhao Zhang, Yan Zhu, He Wang† CVPR 2024 Paper / Code / Project page We propose a robust zero-shot 3D instance segmentation method that leverages the 3D view consensus of 2D candidate masks. Our method can integrate with a 2D visual foundation model (e.g., CLIP) to achieve open-vocabulary 3D instance segmentation.


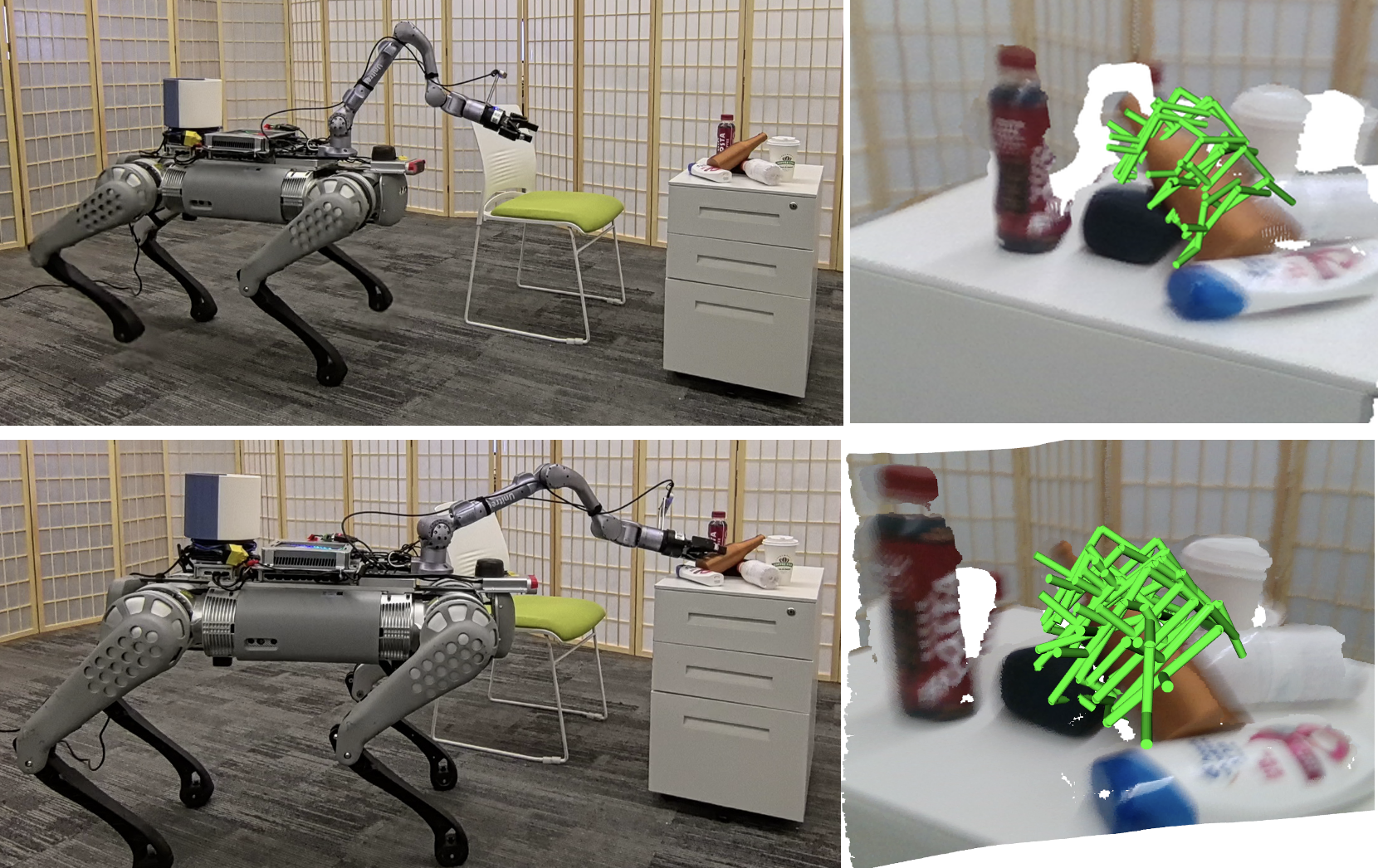

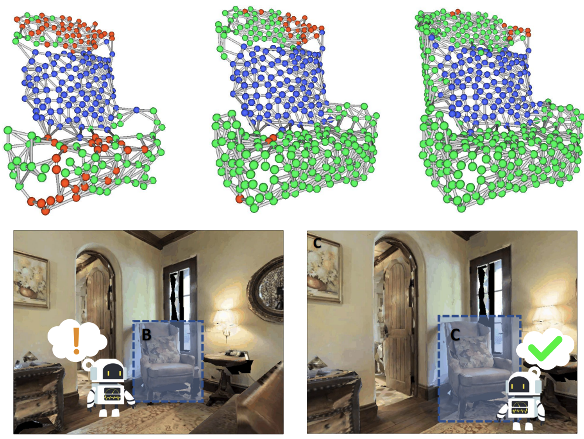

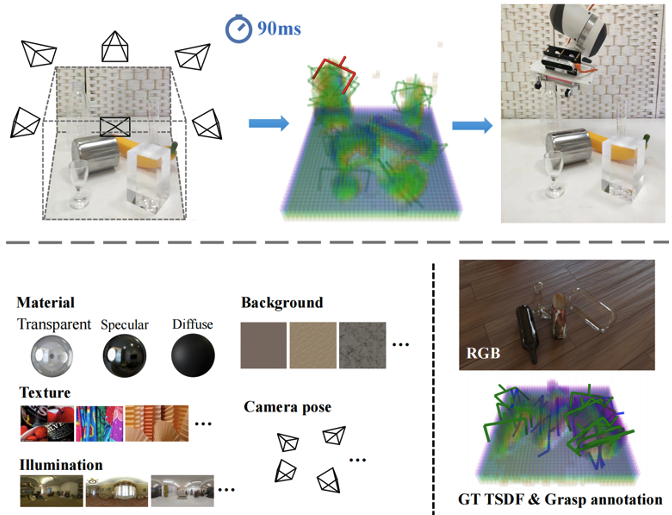

Jiazhao Zhang*, Nandiraju Gireesh*, Jilong Wang, Xiaomeng Fang, Chaoyi Xu, Weiguang Chen, Liu Dai, He Wang† ICRA 2024 Paper / Code / Project page We propose a graspability-aware mobile manipulation approach powered by an online grasping pose fusion framework that enables temporally consistent grasping observations.


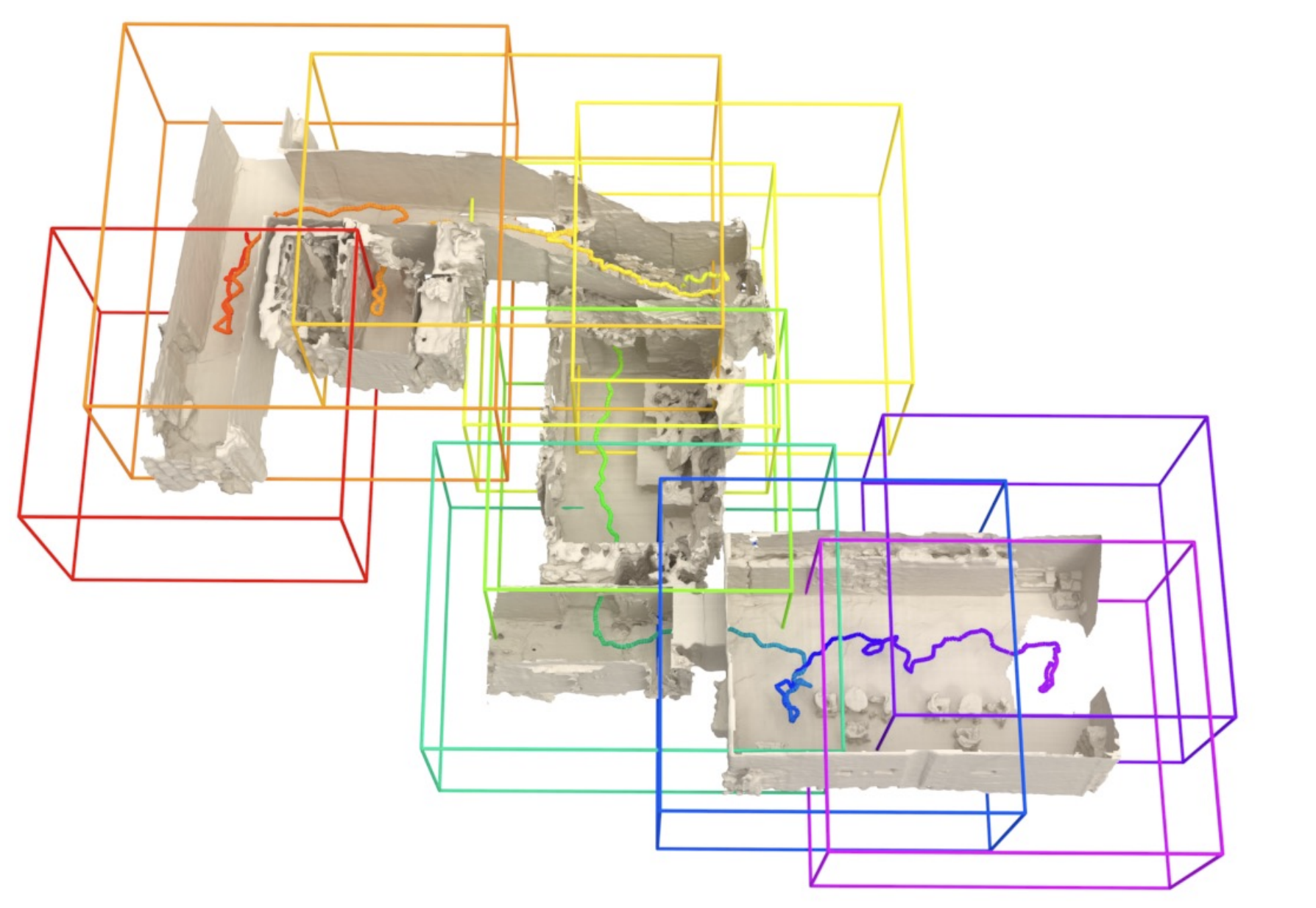

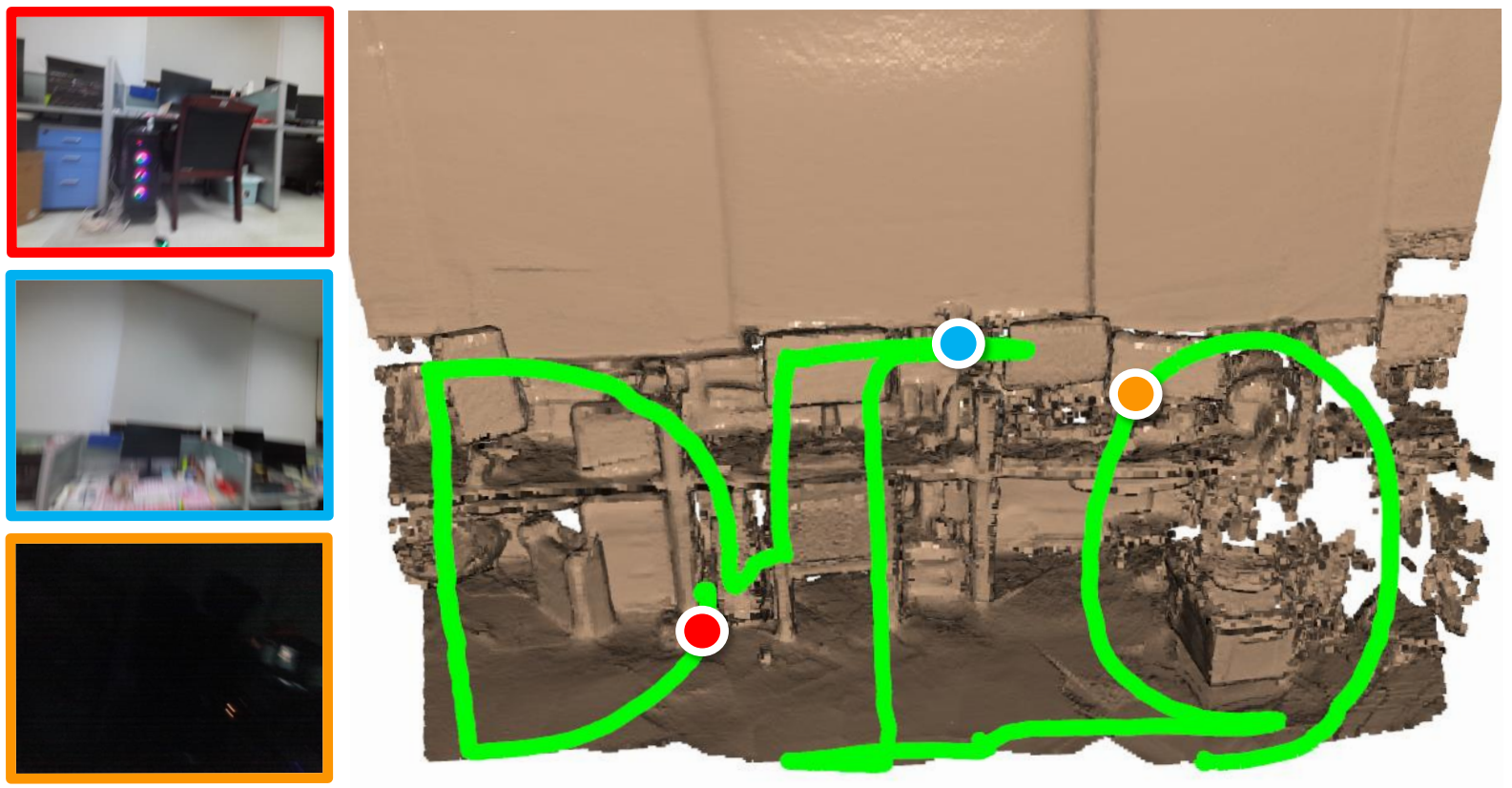

Yijie Tang*, Jiazhao Zhang*, Zhinan Yu, He Wang, Kai Xu† ACM Transactions on Graphics (SIGGRAPH Asia 2023) Paper / Code We introduce MIPS-Fusion, a robust and scalable online RGB-D reconstruction method based on a novel neural implicit representation: the multi-implicit-submap.


Jiazhao Zhang*, Liu Dai*, Fanpeng Meng, Qingnan Fan, Xuelin Chen, Kai Xu, He Wang† CVPR 2023 Paper / Code / Project page We propose a framework for the challenging 3D-aware ObjectNav task based on two straightforward sub-policies, namely a corner-guided exploration policy and a category-aware identification policy.


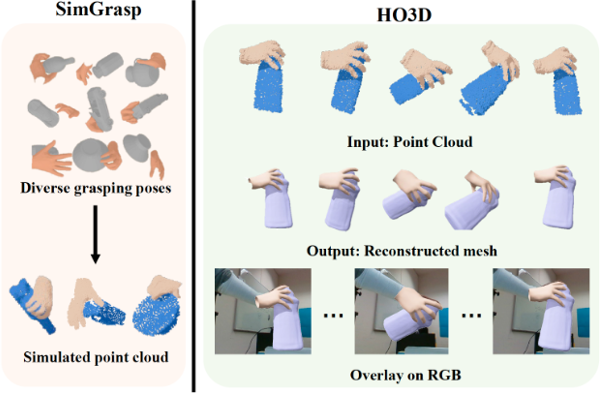

Qiyu Dai*, Yan Zhu*, Yiran Geng, Ciyu Ruan, Jiazhao Zhang, He Wang† ICRA 2023 Paper / Code & Data / Project page


Jiayi Chen*, Mi Yan*, Jiazhao Zhang, Yinzhen Xu, Xiaolong Li, Yijiang Weng, Li Yi, Shuran Song, He Wang† AAAI 2023 (Oral Presentation) Paper / Code & Data / Project page


Jiazhao Zhang, Yijie Tang, He Wang, Kai Xu† Transactions on Robotics (T-RO 2022) Paper / Contact me for code permission To realize efficient random optimization in the 18D state space of IMU tracking, we propose to identify and sample particles from the active subspace.


Jiazhao Zhang, Chenyang Zhu, Lintao Zheng, Kai Xu† ACM Transactions on Graphics (SIGGRAPH 2021) Paper / Code & Data We propose to tackle the difficulties of fast-motion camera tracking in the absence of inertial measurements using random optimization.


Jiazhao Zhang*, Chenyang Zhu*, Lintao Zheng, Kai Xu† CVPR 2020 Paper / Code & Data We propose a novel fusion-aware 3D point convolution that operates directly on the geometric surface being reconstructed and effectively exploits inter-frame correlation for high-quality 3D feature learning.


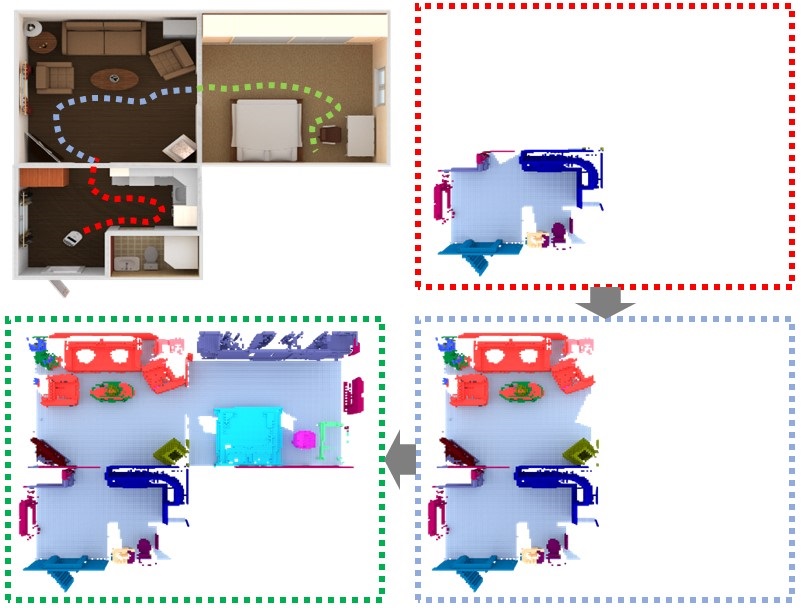

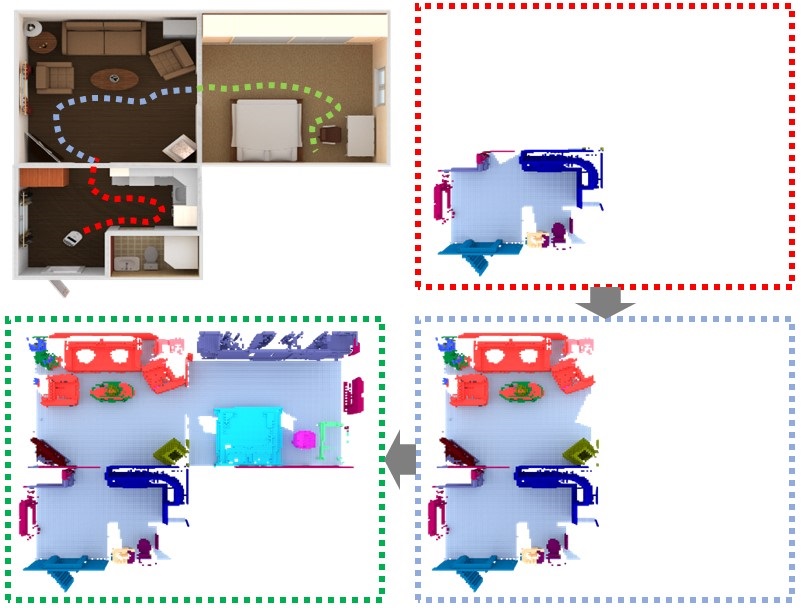

Lintao Zheng, Chenyang Zhu, Jiazhao Zhang, Hang Zhao, Hui Huang, Matthias Niessner, Kai Xu† Computer Graphics Forum (Pacific Graphics 2019) Paper We propose a novel approach to robot-operated active understanding of unknown indoor scenes, based on online RGB-D reconstruction with semantic segmentation.


Conference Reviewer: RSS, ICCV, NeurIPS, CVPR, ICLR, CoRL, ICRA, IROS

Journal Reviewer: TPAMI, TIP, RA-L